The Grouparoo Blog

In today’s data-driven business world, organizations are looking for more efficient ways to leverage data from a variety of sources.

For example, businesses often need to evaluate their performance based on large volumes of customer and sales data that might be stored in a variety of locations and formats. Security and compliance teams need to monitor data from a wide array of devices and systems to detect threats as quickly as possible.

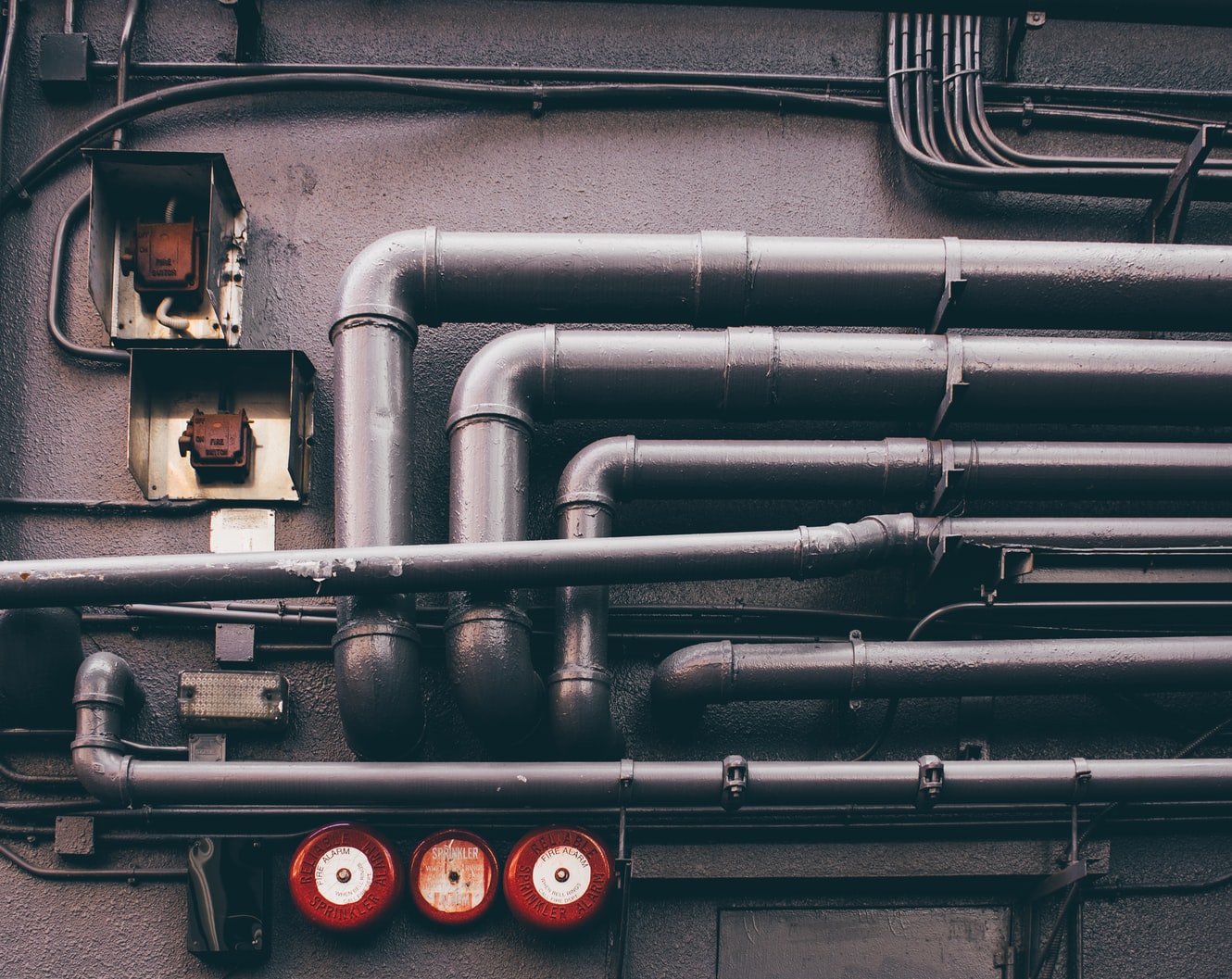

Such data is usually analyzed in an environment separate from the source systems. As a result, the data has to be moved from source to destination systems, which is usually done with data pipelines.

What is a Data Pipeline?

A data pipeline is a set of processes that enable the movement and transformation of data from different sources to destinations. A data pipeline typically consists of three main elements: an origin, a set of processing steps, and a destination.
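To make the three elements concrete, here is a minimal sketch in Python. It is an illustration under simple assumptions, not a production design: the names `run_pipeline`, `drop_missing_email`, and `lowercase_email` are hypothetical, the origin is an in-memory list, and the destination is another list standing in for a real target system.

```python
from typing import Callable, Iterable, Iterator

Record = dict
Step = Callable[[Iterable[Record]], Iterable[Record]]


def run_pipeline(origin: Iterable[Record], steps: list[Step], destination: list[Record]) -> None:
    """Pull records from the origin, apply each step in order, write to the destination."""
    data: Iterable[Record] = origin
    for step in steps:
        data = step(data)
    destination.extend(data)


def drop_missing_email(records: Iterable[Record]) -> Iterator[Record]:
    """Processing step: skip records that have no email address."""
    return (r for r in records if r.get("email"))


def lowercase_email(records: Iterable[Record]) -> Iterator[Record]:
    """Processing step: normalize email addresses to lowercase."""
    return ({**r, "email": r["email"].lower()} for r in records)


# Hypothetical origin: in practice this might be an API, a database, or a file.
source_rows = [
    {"id": 1, "email": "Ada@Example.com"},
    {"id": 2, "email": None},
]
loaded: list[Record] = []  # stands in for a real destination such as a warehouse table
run_pipeline(source_rows, [drop_missing_email, lowercase_email], loaded)
print(loaded)  # [{'id': 1, 'email': 'ada@example.com'}]
```

Because each step takes records in and hands records out, steps can be added, removed, or reordered without touching the origin or the destination.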

Data pipelines are key in enabling the efficient transfer of data between systems for data integration and other purposes. Let’s take a closer look at some of the major components of a data pipeline.

Origin

The origin of a data pipeline is the point at which data enters the pipeline. Possible origins include application APIs, social media, relational databases, IoT device sensors, and data lakes. The origin can also be a data warehouse, as in a reverse ETL pipeline, which moves data from your warehouse to various destinations.

Destination

The destination is the final point to which the data is routed. Destinations can vary depending on the use case of the pipeline. For example, data can be transferred from a source into a data visualization application, an analytics database, or moved to a data warehouse. If your data started in the data warehouse, your destination may be your business tools such as Mailchimp, Zendesk, HubSpot, or Salesforce. Data pipelines could also be configured to route data back to the same source such that the pipeline only transforms the data set.

Dataflow

Dataflow refers to the flow of data from the ingestion sources to the destination. Along the way, the data set may be modified by transformations such as sorting, validation, standardization, deduplication, and verification.
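A few of these transformations can be sketched in Python as follows. The record shape and the function names (`standardize`, `is_valid`, `deduplicate`, `transform`) are assumptions made for illustration, and the email check is deliberately rough rather than a complete validator.

```python
def standardize(record: dict) -> dict:
    """Trim whitespace and lowercase the email so equivalent values compare equal."""
    return {**record, "email": record["email"].strip().lower()}


def is_valid(record: dict) -> bool:
    """Rough validation: require exactly one '@' with text on both sides."""
    local, sep, domain = record["email"].partition("@")
    return bool(local and sep and domain) and "@" not in domain


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first record seen for each email address."""
    seen: set[str] = set()
    unique = []
    for record in records:
        if record["email"] not in seen:
            seen.add(record["email"])
            unique.append(record)
    return unique


def transform(records: list[dict]) -> list[dict]:
    """Standardize, validate, deduplicate, then sort by id."""
    cleaned = [standardize(r) for r in records]
    valid = [r for r in cleaned if is_valid(r)]
    return sorted(deduplicate(valid), key=lambda r: r["id"])


raw = [
    {"id": 3, "email": " Grace@Example.com "},
    {"id": 1, "email": "ada@example.com"},
    {"id": 2, "email": "not-an-email"},
    {"id": 4, "email": "GRACE@example.com"},
]
print(transform(raw))
# [{'id': 1, 'email': 'ada@example.com'}, {'id': 3, 'email': 'grace@example.com'}]
```

Standardizing before deduplicating matters here: the two spellings of Grace's address only count as duplicates once they have been normalized.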

Processing

Processing, which is closely related to dataflow, includes the steps involved in ingesting data from origin points, transforming it, storing it, and moving it to a destination. Processing can be done in batches when large volumes of data need to be moved at regular intervals or in real-time by streaming data.
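The difference between the two modes can be shown with a small Python sketch. The `process` function is a placeholder transformation, and `run_batches` and `run_stream` are hypothetical names; a real streaming pipeline would read from a message queue or similar source rather than a `range`.

```python
from itertools import islice
from typing import Iterable, Iterator


def process(record: int) -> int:
    """Placeholder transformation: double the value."""
    return record * 2


def run_batches(source: Iterable[int], batch_size: int) -> Iterator[list[int]]:
    """Batch mode: collect up to batch_size records, then process them together."""
    iterator = iter(source)
    while batch := list(islice(iterator, batch_size)):
        yield [process(r) for r in batch]


def run_stream(source: Iterable[int]) -> Iterator[int]:
    """Streaming mode: process each record as soon as it arrives."""
    for record in source:
        yield process(record)


events = range(1, 6)
print(list(run_batches(events, batch_size=2)))  # [[2, 4], [6, 8], [10]]
print(list(run_stream(events)))                 # [2, 4, 6, 8, 10]
```

Both modes apply the same transformation. What changes is when results become available: after each full batch, or after each individual record.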

Monitoring

Data pipelines typically need to be monitored to ensure that the process remains efficient and functional. As a result, they need to include mechanisms that alert engineers to potential failure scenarios such as network congestion or offline targets.
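As a rough sketch of what such a mechanism might look like, the Python example below retries a task and raises an alert when every attempt fails. The names `run_with_monitoring` and `offline_target` are hypothetical, and the alert is simply appended to a list; a real pipeline would likely wait between retries and notify a paging, email, or chat system instead.

```python
import logging
from typing import Callable

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("pipeline")


def run_with_monitoring(
    task: Callable[[], int],
    alert: Callable[[str], None],
    max_attempts: int = 3,
) -> bool:
    """Run a task, retrying on connection errors and alerting if every attempt fails."""
    for attempt in range(1, max_attempts + 1):
        try:
            count = task()
            logger.info("attempt %d succeeded, %d records moved", attempt, count)
            return True
        except ConnectionError as exc:
            logger.warning("attempt %d failed: %s", attempt, exc)
    alert(f"pipeline task failed after {max_attempts} attempts")
    return False


def offline_target() -> int:
    """Simulates a destination that cannot be reached."""
    raise ConnectionError("target is offline")


alerts: list[str] = []  # stands in for a pager, email, or chat notification
ok = run_with_monitoring(offline_target, alerts.append)
print(ok, alerts)  # False ['pipeline task failed after 3 attempts']
```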

Workflow

Workflow refers to the sequence of tasks in a data pipeline and their dependencies on one another. Such dependencies can be business-oriented, such as the need to cross-verify data across different sources, or technical.
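One way to model task dependencies is as a graph that is sorted so each task runs only after the tasks it depends on. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later); the task names are made up for illustration.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it starts.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "cross_verify": {"extract_orders", "extract_customers"},
    "load_warehouse": {"cross_verify"},
}

# static_order() yields the tasks in an order that respects every dependency.
order = list(TopologicalSorter(dependencies).static_order())
print(order)
```

The two extract tasks do not depend on each other, so either may come first, but `cross_verify` always follows both and `load_warehouse` always comes last. If the dependencies contained a cycle, `static_order()` would raise `graphlib.CycleError` instead of returning an order.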

Technology

A variety of tools and technologies can be used to implement various components of a data pipeline. The choice of tooling and infrastructure will depend on factors such as the organization’s size, budget, and industry as well as the types and use cases of the data.

Some common data pipeline tools include data warehouses, ETL tools, Reverse ETL tools, data lakes, batch workflow schedulers, data processing tools, and programming languages such as Python, Ruby, and Java.

Data Pipeline vs ETL

An ETL (Extract, Transform, and Load) system is a specific type of data pipeline that transforms and moves data across systems in batches. An ETL data pipeline extracts raw data from a source system, transforms it into a structure that can be processed by a target system, and loads the transformed data into the target, usually a database or data warehouse.
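The three stages can be sketched in Python using only the standard library. Everything here is an assumption made for illustration: the CSV text stands in for a source system export, an in-memory SQLite database stands in for the warehouse, and the `orders` schema is invented.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system.
RAW_CSV = """order_id,amount,currency
1001,19.99,usd
1002,5.00,USD
"""


def extract(text: str) -> list[dict]:
    """Extract: parse the raw CSV into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: convert types and normalize values to fit the target schema."""
    return [
        (int(r["order_id"]), round(float(r["amount"]) * 100), r["currency"].upper())
        for r in rows
    ]


def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Load: write the transformed batch into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount_cents INTEGER, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory database standing in for a warehouse
load(conn, transform(extract(RAW_CSV)))
result = conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
print(result)  # [(1001, 1999, 'USD'), (1002, 500, 'USD')]
```

The transform step is where the raw strings are reshaped for the target: amounts become integer cents and currency codes are uppercased before anything is loaded.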

While the terms “data pipeline” and ETL are often used interchangeably, there are some key differences between the two. Unlike traditional ETL systems, data pipelines don’t have to move data in batches. Data can be processed in real-time (or streamed) through a data pipeline. In addition, a data pipeline might not transform the data at all but simply move it across systems.

Thus, ETL systems are a subset of the broader category of data pipelines: ETL is one of many approaches to moving data through a pipeline. More recently, a growing number of companies have been adopting another approach known as reverse ETL.

Unlike regular ETL, reverse ETL moves data from a data warehouse to destinations such as CRM tools and other SaaS applications. Reverse ETL tools such as Grouparoo enable data teams to perform operational analytics with the valuable data sitting in their data warehouses.
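The direction of a reverse ETL sync can be illustrated with a short Python sketch. This is not how Grouparoo or any specific tool is implemented: `FakeCrmClient` and its `upsert_contact` method are hypothetical stand-ins for a SaaS API client, and the `purchases` table in an in-memory SQLite database stands in for a warehouse.

```python
import sqlite3


class FakeCrmClient:
    """Hypothetical stand-in for a SaaS API client; it only records what it is sent."""

    def __init__(self) -> None:
        self.contacts: dict[str, dict] = {}

    def upsert_contact(self, email: str, properties: dict) -> None:
        self.contacts[email] = properties


def sync_warehouse_to_crm(conn: sqlite3.Connection, crm: FakeCrmClient) -> int:
    """Read per-customer aggregates from the warehouse and push them to the CRM."""
    rows = conn.execute(
        "SELECT email, COUNT(*), SUM(amount_cents) FROM purchases GROUP BY email"
    ).fetchall()
    for email, order_count, total_cents in rows:
        crm.upsert_contact(
            email,
            {"order_count": order_count, "lifetime_value_cents": total_cents},
        )
    return len(rows)


warehouse = sqlite3.connect(":memory:")  # in-memory database standing in for a warehouse
warehouse.execute("CREATE TABLE purchases (email TEXT, amount_cents INTEGER)")
warehouse.executemany(
    "INSERT INTO purchases VALUES (?, ?)",
    [("ada@example.com", 1999), ("ada@example.com", 500), ("grace@example.com", 1200)],
)
crm = FakeCrmClient()
synced = sync_warehouse_to_crm(warehouse, crm)
print(synced, crm.contacts["ada@example.com"])
# 2 {'order_count': 2, 'lifetime_value_cents': 2499}
```

The warehouse is the origin here and the business tool is the destination, which is the reverse of the ETL flow described above.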

Types of Data Pipeline Solutions

Data pipelines can be categorized based on the type of analytics and workflows they support. Thus, the two main types of data pipelines are batch processing, which is used for traditional analytics, and streaming, which is used for real-time analytics.

Batch processing pipelines are used for periodically extracting, transforming, and feeding data into target systems in batches. These types of pipelines enable the exploration and complex analysis of big datasets of historical data. ETL pipelines fall under this category and are ideal for small sets of data that require complex transformations.

Streaming data pipelines, on the other hand, are ideal for scenarios where data needs to be processed in real-time. Stream processing pipelines are set up to continuously ingest and transform a flowing sequence of data while providing continuous updates to the target system.

Streaming data pipelines enable real-time analytics, allowing organizations to receive up-to-date information about essential operations and react immediately. They can also be less costly to operate and maintain than batch processing pipelines. Streaming data pipeline examples include Yelp’s real-time data pipeline and Robinhood’s ELK stack.

Data Pipeline Implementation Options

Data pipelines can be implemented in-house or using cloud services. On-premises data pipelines are generally resource-intensive to set up and maintain. However, they offer full control over the data flowing through the pipeline.

Cloud-based data pipelines, on the other hand, are much easier to set up and less expensive to maintain. They also offer better scalability and much lower downtime risks. However, there is a danger of vendor lock-in as it can be difficult to switch providers. Additionally, certain businesses aren’t able to use cloud-based data pipelines if the data in question is subject to regulatory requirements, as is often the case with PII, PCI, or PHI data.

Grouparoo, however, offers effective solutions to these challenges. Our hosted cloud offering features a free trial that enables businesses to evaluate the service without the risk of being locked in. We also have a free, open-source offering that can be self-hosted on a private cloud, allowing organizations to maintain full control over their data.

Conclusion

Data pipelines have become an invaluable part of the data stack of many enterprises today. They enable timely data analysis and easier access to business insights for a competitive advantage. With an effective data pipeline, you can ensure that your business benefits from useful data generated from a variety of sources.

Grouparoo is a modern reverse ETL data pipeline tool that enables you to leverage the data you already have in your data warehouse to make better-informed business decisions. It’s easy to set up and use and integrates with a wide selection of CRMs, data warehouses, databases, ad platforms, and SaaS marketing tools.


See all of Micah Bello's posts.

Micah is a freelance writer and budding back-end developer with a love for all things software related. He spends his free time learning about the technologies that drive innovation in the software industry.

Learn more about Micah @ https://github.com/Micah-Bello
